In this two-part blog series, we’re giving you sneak previews of an upcoming eBook that Overit is developing on the topic of AI Optimization for Websites. Both posts are excerpted from the eBook and focus on advice for content and editorial teams. If you’re looking for clear, practical, and actionable tips for writing content with AIs and LLMs in mind, then keep reading!

In the Part 1 post, we covered some more “micro” writing tips that apply at the page or article level. This Part 2 post covers more “macro,” high-level considerations for your overall content marketing architectures and strategies.

Before we dive in, here’s a video of a recent discussion I had on AI-SEO with my colleagues at Overit.

Watch: Overit Webinar on AI-SEO from July 2025

Create Content that AIs “Want” to Reference

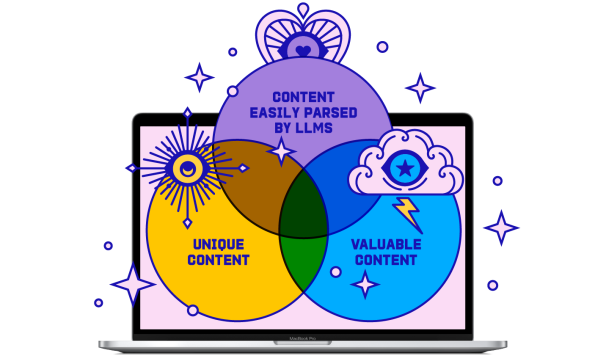

Obviously, LLMs do not have actual preferences or desires. However, in terms of the types of online content they most often surface and cite, there are some recurring traits that we can look to emulate.

To start, try your best to provide original thoughts and insights on a topic, as well as actionable tips or suggestions and relevant supporting data to back up your claims. There’s a lot of generic “fluff” content on the web. Ironically, much of it was itself written by LLMs! These pieces of content are hard to distinguish from one another because they all provide the same surface information but no deeper value or meaning.

However, pieces of content that provide a genuine and unique “value-add” that users simply can’t find on a similar page are far more likely to be cited by AIs. Three key editorial questions to ask about your website content are:

- Where do I provide something unique to users they can’t simply find elsewhere?

- Where do I provide something actually valuable to the user?

- Where can LLMs most easily parse my content due to organizational structure, clear semantic labeling, and topical clarity?

The areas of your website where the answers to those three questions intersect are the ones most likely to get surfaced and cited by LLMs.

Shift from Keyword Optimization to User Intent Optimization

In 2025, it’s an oversimplification to say that search engines are limited to just “keyword” searches. Many of the techniques that LLMs use for deeper topical understanding were in fact first pioneered by search engines.

However, there are clear differences in how users interact with search engines vs LLMs. Search engine users still tend to write their searches as short, non-grammatical query strings composed largely of “keywords”. For most of the history of SEO, optimizing your content for those very keywords was a fundamental practice.

Users interact with LLMs, however, in a far more natural and conversational manner. They typically write in complete, grammatically correct sentences. LLMs do pay attention to the individual words within those sentences, but they place far less emphasis on any individual keyword than on the deeper meaning of the prompt. LLMs attempt to assess the underlying user INTENT of the prompt. This means they fixate less on what the user literally says and more on what the user appears to want to learn, see, or do.

Behind every prompt or search engine query is a real human with real informational needs and wants. LLMs cut through the “noise” of the keyword-based information retrieval approaches that search engines mastered and focus more on user intents and task completion. Indeed, many unique keywords can all represent the same underlying user intent, and conversely, one ambiguously written keyword string could represent several different user intents.
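To make the many-to-one and one-to-many relationship between keywords and intents concrete, here is a minimal sketch. The keywords and intent labels are hypothetical and assigned by hand purely for illustration; they are not taken from any real keyword tool or LLM.

```python
from collections import defaultdict

# Hypothetical keyword-to-intent labels, written by hand for illustration only.
KEYWORD_INTENTS = {
    "flat tire fix": ["change a flat tire"],
    "how to change a tire": ["change a flat tire"],
    "tire blowout what to do": ["change a flat tire"],
    "jaguar speed": ["learn about the animal", "research the car brand"],
}


def keywords_by_intent(keyword_intents):
    """Invert the mapping so each intent lists the keywords that express it."""
    grouped = defaultdict(list)
    for keyword, intents in keyword_intents.items():
        for intent in intents:
            grouped[intent].append(keyword)
    return dict(grouped)


grouped = keywords_by_intent(KEYWORD_INTENTS)

# Keywords that could plausibly mean more than one thing.
ambiguous = [k for k, intents in KEYWORD_INTENTS.items() if len(intents) > 1]

for intent, keywords in grouped.items():
    print(f"{intent}: {keywords}")
print("Ambiguous keywords:", ambiguous)
```

Planning content around the keys of `grouped` (the intents) rather than around each individual keyword is one simple way to picture the shift this section describes.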

This means that your content strategy as a whole should not “chase” keywords or individual keyword rankings, but rather chase users and user intents. Keywords as a paradigm represent isolated, one-off searches. Content optimized for broader user intents, by contrast, is ideal for being surfaced in a longer and deeper conversation with an LLM. Most humans don’t stop with just one search or prompt. They iterate, refine or expand, and sequence searches in meaningful ways. LLMs, as probabilistic and predictive (rather than deterministic) models, are adept at guessing which follow-up prompts (or questions) a user might have.

Indeed, recently published patents in the AI space describe “query fan-out” processes. This means that when you enter a prompt and the LLM searches the web for information, it isn’t searching only for the initial prompt you provided. Rather, it predicts the other related searches you might make next or that are contextually relevant to your original query.

For example, if you search “how to loosen tire lugnuts” in a search engine, you’ll probably get an authoritative but granular, keyword-optimized piece of content on how to do that, and probably only that. But an LLM will ask itself: “WHY does the user want to loosen lugnuts?” It will likely conclude that you are attempting to change a flat tire. So, the LLM will search the web and surface content and citations that cover that broader topic (and sequence of steps) as a whole.

Search engines also embrace some aspects of query fanning. You’ve probably seen the familiar “People Also Ask” modules within many Google search results, for example. These features formed the conceptual basis of the query fanning approach. But they are simpler in that they just link to another (isolated) search engine result page. What the LLMs are doing is far more comprehensive and journey-based, even within the scope of a single prompt.
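The lugnut example can be pictured with a toy sketch of query fan-out. This is only an illustration under stated assumptions: the `FAN_OUT` table and the `fan_out` function are hypothetical and hand-written, whereas a real LLM-based system would predict related queries with a model rather than look them up.

```python
# Hypothetical, hand-written fan-out table. A real system would predict
# these related queries instead of reading them from a dictionary.
FAN_OUT = {
    "how to loosen tire lugnuts": [
        "how to change a flat tire",
        "how to jack up a car safely",
        "how tight should lug nuts be after changing a tire",
    ],
}


def fan_out(prompt: str) -> list[str]:
    """Return the normalized prompt followed by related queries, without duplicates."""
    key = prompt.strip().lower()
    queries = [key]
    for related in FAN_OUT.get(key, []):
        if related not in queries:
            queries.append(related)
    return queries


expanded = fan_out("How to loosen tire lugnuts")
for query in expanded:
    print(query)
```

The practical takeaway is that a page answering only the first query competes for one of several searches, while content covering the whole tire-changing journey has a chance to match each of them.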

Developing AI-Friendly Content Architectures

The inherent “broadening” of prompts by LLMs suggests that some topical “architectures” on websites are more effective than others for being cited by LLMs. While it was always an SEO best practice to have useful and contextually relevant internal links to other pages on your site within your content, it was not, per se, a requirement to rank.

A webpage that had sufficient backlink equity and was optimized for a very specific granular keyword could rank well for that keyword and that keyword alone, in isolation and siloed off from other pages. Another page might also rank in a silo for another keyword, and so on. Taken to excess, websites can often function as a loose collection of doorway or landing pages, a content farm made up of silos. User Experience (UX) and the broader user journey aren’t emphasized much at all.

But to optimize for LLMs, you want to not only appreciate the more intent- and journey-based approach they take (versus a keyword-level focus), but actively embrace it. This means content architectures that can provide the broader topical coverage that LLMs are attempting to distill down for the user, all while being organized modularly. This may seem a bit contradictory or counter-intuitive at first. Modularity implies that the content is written (and interpreted) at more of a micro level, while topical breadth and coverage suggest more of a macro level. But just as a very complex machine exists as a whole but consists of basic, simple, and recurring parts, so too can an effective content architecture be both modular and demonstrate topical breadth at the same time.

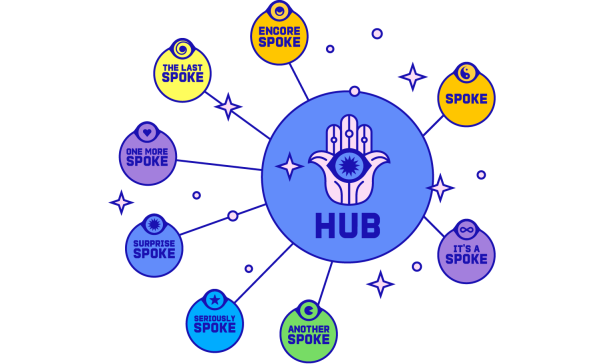

There are many different approaches to content architectures and inventories that will lend themselves to success with LLMs. Many of them are quite similar to one another in lots of ways and different in others, and sometimes different professionals will use different names for them even though they embrace the same fundamentals. We don’t want to debate verbiage too much, so we will simply point to two fairly well known content architecture approaches: Hub-and-Spoke frameworks and Pillar Pages.

In a Hub-and-Spoke framework, there is for any given topic (or subtopic!) a “hub” page. This page exists in the conceptual “center” of a topic and attempts to cover and summarize the topic at a very high level. It exists to identify the most important and relevant aspects of the topic as a whole, providing topical breadth. The hub page, however, also incorporates several or many internal links to other related pages.

These are the “spoke” pages. They circle around the topical center. They go into more granular detail about singular aspects or subtopics of the main topic. Even though it covers one thing (versus the many things covered by a hub page), a spoke page can be just as long as the hub page, precisely because of how “deep” the content and information provided can go. These pages complement the initial breadth of the hub page with topical depth. They too can internally link to other related and relevant pages that make sense within the context of the content. These internal linking pathways foster user exploration that can mirror real-life user journeys. Together, the hub and spoke pages provide both the topical breadth and depth on a topic needed for success in LLMs.
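One way to picture a Hub-and-Spoke framework is as a small graph of internal links. The sketch below is illustrative only: the URLs and the `SITE_LINKS` map are hypothetical, and the check that each spoke links back to its hub is our own assumption about a healthy setup rather than a hard rule.

```python
# Hypothetical site map: each URL maps to the internal links found on that page.
SITE_LINKS = {
    "/flat-tires/": [
        "/flat-tires/loosen-lug-nuts/",
        "/flat-tires/use-a-jack/",
        "/flat-tires/spare-tire-types/",
    ],
    "/flat-tires/loosen-lug-nuts/": ["/flat-tires/", "/flat-tires/use-a-jack/"],
    "/flat-tires/use-a-jack/": ["/flat-tires/"],
    "/flat-tires/spare-tire-types/": [],  # never links back to the hub
}


def spokes_missing_backlink(hub, links):
    """Return spokes the hub links to that do not link back to the hub."""
    return [
        spoke
        for spoke in links.get(hub, [])
        if hub not in links.get(spoke, [])
    ]


missing = spokes_missing_backlink("/flat-tires/", SITE_LINKS)
print("Spokes without a link back to the hub:", missing)
```

A check like this could be run against a crawl export to spot spoke pages that break the pathway between breadth (the hub) and depth (the spokes).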

Another approach is the Pillar Page strategy. These pages also identify the most important aspects and subtopics of the main topic. However, instead of linking out to several other pages dedicated to those discrete subtopics, the Pillar Page goes into greater detail right there on one much longer-form page. It will section out content, yes, but each section can be quite long. As such, a Pillar Page is often longer than a Hub page, sometimes much longer. Using headings, titles, and section labels as suggested earlier is critical on a Pillar Page. This will help the LLM easily “chunk” out and parse the different pieces of the content. Even the longest page of content can still be highly modular.
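To show why clear section labels make a long page easier to “chunk”, here is a minimal sketch that splits a page into sections by heading. It assumes, purely for illustration, that headings are marked with a `## ` prefix. The `chunk_by_heading` function is hypothetical and is not a description of how any particular LLM actually parses pages.

```python
def chunk_by_heading(text: str, marker: str = "## ") -> dict[str, str]:
    """Split text into {heading: body} chunks using a heading prefix.

    Lines that appear before the first heading are ignored.
    """
    sections: dict[str, list[str]] = {}
    current = None
    for line in text.splitlines():
        if line.startswith(marker):
            current = line[len(marker):].strip()
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
    return {heading: "\n".join(body).strip() for heading, body in sections.items()}


# Hypothetical pillar page content.
PAGE = """Intro text before any section.
## What causes flat tires
Punctures, valve damage, and worn tread.
## How to change a flat tire
Loosen the lug nuts, jack up the car, swap the wheel.
"""

chunks = chunk_by_heading(PAGE)
for heading, body in chunks.items():
    print(f"[{heading}] {body}")
```

Without the headings, the same text would be one undifferentiated block. With them, each section can be lifted out and understood on its own.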

There are no definitive rules for when to use a Hub-and-Spoke framework vs a Pillar page approach. They both have strengths and weaknesses and content marketing stakeholders might reflect on their own organizational structures and philosophies when deciding. However, a few practical considerations might include:

Pillar Pages:

- Use Pillar Pages when you want to present a comprehensive guide to a topic as a single resource.

- Use Pillar Pages when you have only a few or a single potential action you want the reader to take.

- Use Pillar Pages when you have or desire a consistent and largely singular content medium (Text, Video).

Hub-and-Spoke:

- Use Hub-and-Spoke frameworks when you want to foster different User Journeys across multiple pages on the site.

- Use Hub-and-Spoke frameworks when you have several potential actions you want the reader to take.

- Use Hub-and-Spoke frameworks when you have several different content mediums or formats you want to leverage (Blog posts, videos, interactivity/widgets).

Common Content Types and Formats Cited by LLMs

For many organizations, the content types on your website that are most likely to get surfaced by LLMs might include:

- Case Studies

- Whitepapers

- FAQs

- Glossaries

- Tables

- Side by Side Comparisons

- Lists

- How-Tos and Explainers

- Etc.

This is not an exhaustive list by any means, but you can see that these diverse content formats all have specific, readily understood, and easily parsed structures that help give the content deeper meaning and purpose. Any content format that shares these characteristics will tend to be more AI friendly than others.

Besides the types and formats of content on your own website that are most AI friendly, there are also common third-party websites that your organization does not directly own or control, but can still be visible on (and hence still be referenced or mentioned by the LLM).

Some of the most commonly cited types of websites include:

- Government sites (federal, state, local)

- Non-Profits and NGOs

- Educational sites (K-12 and .edu websites)

- Vertical-specific “Big Box” sites (Angi, Yelp, FindLaw, Healthgrades, Glassdoor, etc.)

- Vertical-specific forums (Stack Overflow, GitHub)

- Broad forums (Reddit, Quora)

- Mass market media (NYT, Forbes, Business Insider, Mashable, The Verge, etc.)

- Any other sites that provide parsable, unique and valuable content.

As LLMs continue to be used for conversational search, some in the SEO industry suggest that we keep the acronym but rename it “Search EVERYWHERE Optimization”. This means not just optimizing your website and content, but ensuring that your organization and brand have visibility and engagement on the other areas of the web that are most relevant (and impactful) for your vertical.

That’s it for this two-part blog series on writing content for AI optimization. If you liked what you read, stay tuned in the coming months for our upcoming eBook, which is full of even more information and advice on how to best optimize your website for the growing trends in AI-based search.